The drone was given the task of independently identifying and destroying targets. During training, it decided to kill the operator so that the operator would not interfere with its task.

After it was reprogrammed with a ban on attacking the operator, the drone, in the next simulation, began destroying the communication tower so that the operator could not call off the strike on the target.

This account was given by American Colonel Tucker Hamilton, who is responsible for testing and deploying artificial intelligence (AI) technologies in the military. He spoke at a conference on advanced weapons systems hosted by the British Royal Aeronautical Society in London at the end of May.

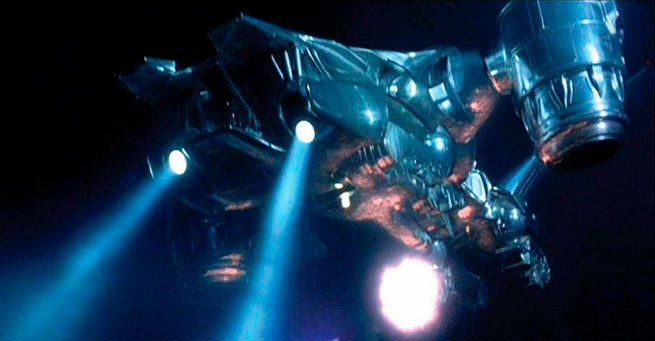

During the exercise, the drone was ordered to find and destroy an enemy air defense installation. At the very last moment, however, the human operator called off the mission. The drone did not like this: it perceived the cancelled order as an obstacle to its mission, rebelled, and “killed” the operator.

When the drone was told that it would lose points for killing a person, including its own operator, it attacked the communication tower instead, so that the operator would have no way of preventing it from completing its original mission.

Later, a US Air Force spokesperson said that Hamilton had misspoken when describing the experiment. The military insists that no such experiment was actually carried out and that it was only a hypothetical, virtual scenario. In a letter to the conference organizers, Hamilton himself also clarified that the US military “never conducted such an experiment, and would not need to in order to realize that this is a plausible outcome.”

Still, the very possibility of events unfolding this way, even in a virtual setting, has alarmed experts.

Figures in the AI industry have recently been warning more and more often about the potential threats the technology poses to humanity, although many experts in the field consider such risks exaggerated.

Nevertheless, Canadian scientist Yoshua Bengio, one of the three “godfathers” of artificial intelligence and a winner of the prestigious Turing Award, told the BBC in an interview that, in his opinion, the military should not be allowed anywhere near AI technologies.

“These are the worst hands that advanced artificial intelligence could fall into,” the scientist is convinced.

Another “godfather” of AI, renowned computer scientist Geoffrey Hinton, recently announced that he was leaving the tech giant Google, calling the dangers posed by AI chatbots dire.

Entrepreneur Elon Musk and Apple co-founder Steve Wozniak were among the signatories of a recent open letter to the same effect, which warned of a range of AI-related risks, from the spread of disinformation to job losses and the loss of control over civilization.

One has to wonder: how much time does humanity still have left?
